Understanding the Swash Data Product Provider

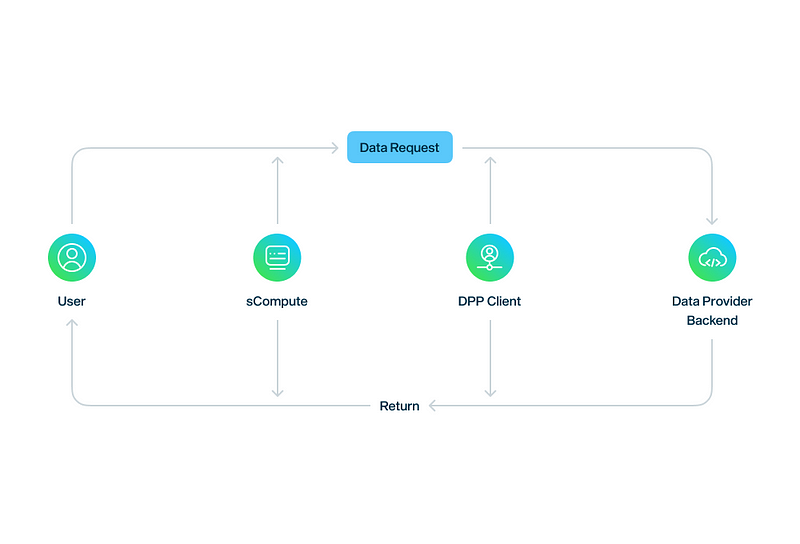

Sending the data request can be done directly via the DPP Client SDK as well as through the sCompute portal where the user can generate a request and send it. The sCompute portal uses the DPP Client SDK for communicating with the DPP backend and the purchase contract.

The Data Product Provider (DPP) is responsible for defining marketplace products and datasets. These include the query, target marketplace, and price. Users can send, view the list of, and buy a data request, as well as download the data after purchasing it.

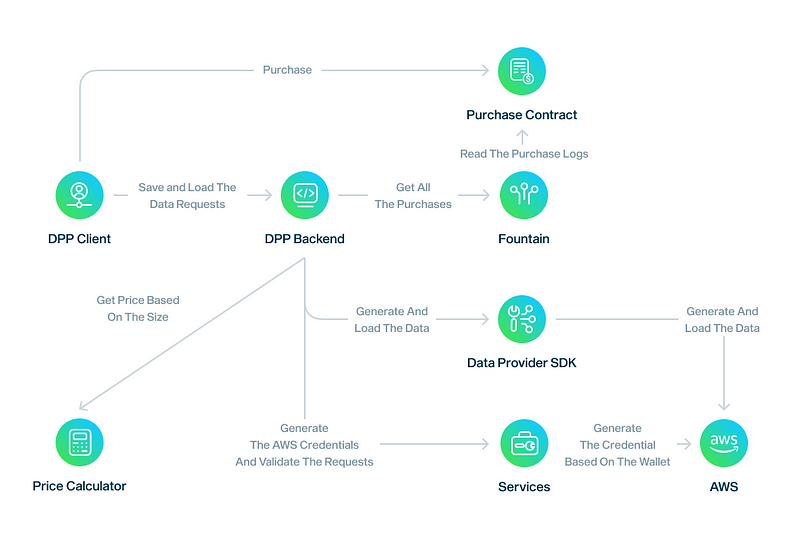

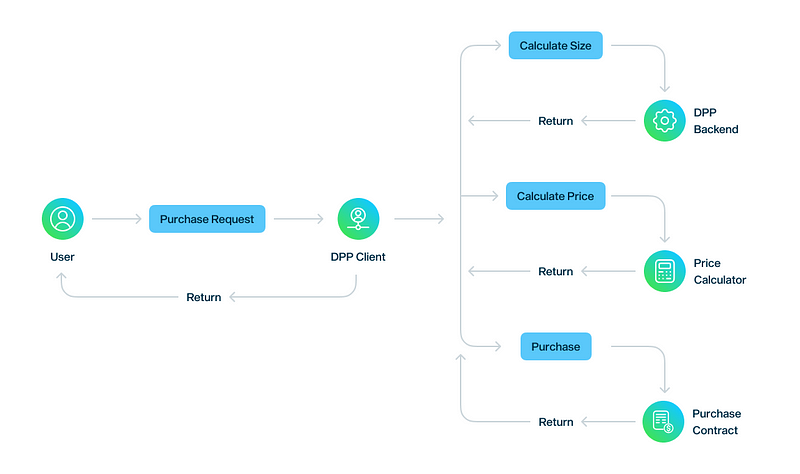

The user has two options for purchasing a request: call a DPP Client SDK API or use the sCompute portal. Before calling the purchase contract, the DPP Client SDK calls a DPP Backend API to obtain the size of the request, then sends that size to an API of the price calculator module to obtain the price. With the price in hand, it calls the purchase contract to buy the data.
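The order of calls in the purchase step can be sketched as follows. This is a minimal illustration, not the DPP Client SDK itself: the three interfaces and their method names (`get_request_size`, `get_price`, `buy`) are hypothetical stand-ins for the DPP Backend, the price calculator module, and the purchase contract.

```python
from dataclasses import dataclass
from typing import Protocol


class DppBackend(Protocol):
    # Hypothetical interface: returns the size of a saved data request.
    def get_request_size(self, request_id: str) -> int: ...


class PriceCalculator(Protocol):
    # Hypothetical interface: returns a price for a given data size.
    def get_price(self, size_bytes: int) -> int: ...


class PurchaseContract(Protocol):
    # Hypothetical interface: performs the purchase, returns a transaction hash.
    def buy(self, request_id: str, price: int) -> str: ...


@dataclass
class PurchaseReceipt:
    request_id: str
    size_bytes: int
    price: int
    tx_hash: str


def purchase_request(
    request_id: str,
    backend: DppBackend,
    calculator: PriceCalculator,
    contract: PurchaseContract,
) -> PurchaseReceipt:
    """Size first, then price, then the purchase contract call."""
    size = backend.get_request_size(request_id)
    price = calculator.get_price(size)
    tx_hash = contract.buy(request_id, price)
    return PurchaseReceipt(request_id, size, price, tx_hash)
```

Passing the three collaborators in as arguments keeps the sequence testable with in-memory fakes before any real backend or contract is involved.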

The fountain module is an off-chain module that listens to the purchase events of the blockchain contract and saves all purchases to a database table. The DPP Backend calls a fountain module API to learn about new purchases. Once the DPP Backend has been informed of a purchase, it changes the status of the request and creates a new job to prepare the data.
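The status change and job creation can be pictured with a small in-memory sketch. The `DataRequest` and `Job` types, the `SAVED` and `PENDING` values, and the `apply_purchases` function are assumptions made for illustration; only the `PAYED` status is named in this article.

```python
from dataclasses import dataclass
from typing import Dict, Iterable, List


@dataclass
class DataRequest:
    request_id: str
    status: str = "SAVED"  # hypothetical initial status


@dataclass
class Job:
    request_id: str
    state: str = "PENDING"  # hypothetical job state


def apply_purchases(
    purchased_ids: Iterable[str], requests: Dict[str, DataRequest]
) -> List[Job]:
    """Mark purchased requests as PAYED and create one data job for each."""
    new_jobs: List[Job] = []
    for request_id in purchased_ids:
        request = requests.get(request_id)
        # Skip unknown requests and purchases that were already processed.
        if request is None or request.status == "PAYED":
            continue
        request.status = "PAYED"
        new_jobs.append(Job(request_id))
    return new_jobs
```

Skipping requests that are already `PAYED` makes the step safe to repeat if the same purchase is fetched from the fountain more than once.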

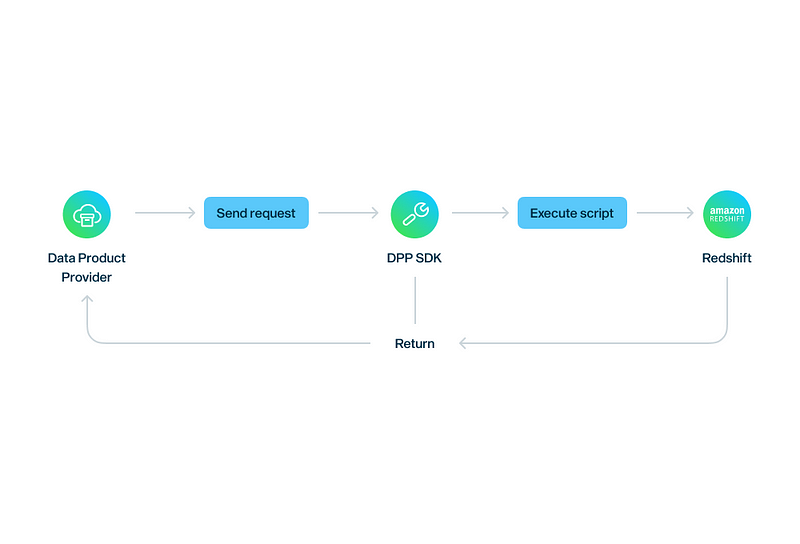

The final data must be generated at an AWS S3 path. All of the original data is stored in an AWS Redshift database. When the data preparation job begins on the DPP backend, it creates a select script based on the user’s criteria. The backend then uses the Data Provider SDK module to connect to the Redshift database, execute the select script, and save the result to a file at the S3 path.
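One way to run a select script on Redshift and write the result straight to S3 is Redshift's `UNLOAD` command. The article does not say which mechanism the Data Provider SDK uses, so the sketch below is an assumption: it only builds the statement text, and the function name, the CSV format, and the `IAM_ROLE` authorization are illustrative choices.

```python
def build_unload_statement(select_sql: str, s3_path: str, iam_role_arn: str) -> str:
    """Wrap a SELECT in a Redshift UNLOAD statement that writes CSV to S3."""
    if not s3_path.startswith("s3://"):
        raise ValueError("s3_path must start with 's3://'")
    if "'" in s3_path or "'" in iam_role_arn:
        raise ValueError("quotes are not allowed in the S3 path or role ARN")
    # UNLOAD takes the query as a quoted string, so single quotes inside
    # the query are doubled.
    escaped = select_sql.replace("'", "''")
    return (
        f"UNLOAD ('{escaped}')\n"
        f"TO '{s3_path}'\n"
        f"IAM_ROLE '{iam_role_arn}'\n"
        "FORMAT AS CSV HEADER"
    )


if __name__ == "__main__":
    print(
        build_unload_statement(
            "SELECT id FROM events WHERE country = 'DE'",
            "s3://example-bucket/requests/42/",
            "arn:aws:iam::123456789012:role/example",
        )
    )
```

The bucket, role ARN, and table in the usage lines are placeholders, not values from the Swash deployment.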

The Data Provider SDK needs access to run the script on Redshift as well as to save the result as a file to the S3 path, but how can it do that?

A service module is responsible for generating these credentials for each user based on the user’s wallet address. The DPP backend calls the service API to obtain the credentials and passes them on to the Data Provider SDK, which uses them to communicate with Redshift and AWS S3.

Once the user has bought a request, they can download the generated data file through the sCompute portal UI or by calling the download API of the DPP client. Additionally, the user can publish the data to several marketplaces, for example the Ocean Marketplace, and sell it there.

Data Product Provider Components

The Data Product Provider has several components for creating data requests, checking when data should be created for a data request, collecting the data from the database and saving it to the specific path, and checking the status of data provision. The main components of this process are:

- Creating the executable script based on a JSON data model

When the executor job finds jobs and executes them on the Redshift server, the first step is translating the user’s JSON conditions into an executable SQL script. The query builder component handles this step. Depending on the target database, it can create a script for PostgreSQL, MongoDB, and so on.
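A translation of this kind can be sketched as below. The JSON shape (`table`, `columns`, `conditions` with `field`, `op`, `value`) and the operator names are hypothetical, since the article does not publish the real data model. The sketch emits a parameterised query with `%s` placeholders so that user values never end up inside the SQL text.

```python
import re
from typing import Any, Dict, List, Tuple

_IDENTIFIER = re.compile(r"^[A-Za-z_][A-Za-z0-9_]*$")
_OPERATORS = {"eq": "=", "ne": "<>", "gt": ">", "gte": ">=", "lt": "<", "lte": "<="}


def _identifier(name: str) -> str:
    """Accept only plain identifiers for table and column names."""
    if not _IDENTIFIER.match(name):
        raise ValueError(f"invalid identifier: {name!r}")
    return name


def build_select(model: Dict[str, Any]) -> Tuple[str, List[Any]]:
    """Translate a JSON-like request model into SQL text plus parameters."""
    columns = ", ".join(_identifier(c) for c in model["columns"])
    sql = f"SELECT {columns} FROM {_identifier(model['table'])}"
    clauses: List[str] = []
    params: List[Any] = []
    for condition in model.get("conditions", []):
        operator = _OPERATORS.get(condition["op"])
        if operator is None:
            raise ValueError(f"unsupported operator: {condition['op']!r}")
        clauses.append(f"{_identifier(condition['field'])} {operator} %s")
        params.append(condition["value"])
    if clauses:
        sql += " WHERE " + " AND ".join(clauses)
    return sql, params
```

A builder for another target database would keep the same input model and swap only the code that renders it.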

- Update Purchase Job Scheduler

This job calls a fountain API, fetches the latest data purchases, and changes the status of each purchased data request to “PAYED”. A new job record is then created.

The DPP admin must configure the networks through a variable named “SUPPORTED_NETWORKS”. For example, SUPPORTED_NETWORKS = 1,5,100 means that the DPP must fetch all new purchases on MAINNET, GOERLI and GNOSIS from the fountain. The supported values for this variable are 1, 4, 5, 56, 97, 100, and 137.
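Parsing and validating this variable might look like the sketch below. The function name is hypothetical. The names for 1, 5, and 100 follow the article; the names for 4, 56, 97, and 137 are taken from the public chain ID registry, not from the DPP itself.

```python
from typing import List

# Chain IDs listed as supported by the article.
CHAIN_NAMES = {
    1: "Ethereum Mainnet",
    4: "Rinkeby",
    5: "Goerli",
    56: "BNB Smart Chain",
    97: "BNB Smart Chain Testnet",
    100: "Gnosis",
    137: "Polygon",
}


def parse_supported_networks(raw: str) -> List[int]:
    """Parse a comma-separated SUPPORTED_NETWORKS value into chain IDs."""
    chain_ids: List[int] = []
    for part in raw.split(","):
        part = part.strip()
        if not part:
            continue
        chain_id = int(part)  # raises ValueError for non-numeric entries
        if chain_id not in CHAIN_NAMES:
            raise ValueError(f"unsupported network: {chain_id}")
        if chain_id not in chain_ids:
            chain_ids.append(chain_id)
    return chain_ids


if __name__ == "__main__":
    for chain_id in parse_supported_networks("1,5,100"):
        print(chain_id, CHAIN_NAMES[chain_id])
```

Rejecting unknown IDs at startup surfaces a mistyped value before the scheduler begins polling the fountain.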

- Data Creator Job Scheduler

After the data request is saved, one job is created based on the type of the request. The configurable scheduler checks all the jobs and, when it is time to execute one, creates the final query to be executed on Redshift. For this purpose, the Data Product Provider uses the DPP SDK to send the request to the Redshift server, receives the response ID, and saves it to the job history.

The DPP admin can configure the execution period of each job by setting a variable named “PROVIDE_DATA_EVERY”. The value is in milliseconds: for example, setting PROVIDE_DATA_EVERY to 120000 means that this job is executed every 2 minutes.
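The arithmetic behind that example (120000 ms is 120 seconds, or 2 minutes) and a simple due check can be sketched as follows. The function names are hypothetical and the due check is an assumption about how such a scheduler could decide when to run.

```python
from datetime import datetime, timedelta
from typing import Optional


def interval_from_env(value: str) -> timedelta:
    """Interpret a PROVIDE_DATA_EVERY value as a period in milliseconds."""
    milliseconds = int(value)
    if milliseconds <= 0:
        raise ValueError("PROVIDE_DATA_EVERY must be a positive integer")
    return timedelta(milliseconds=milliseconds)


def is_due(last_run: Optional[datetime], now: datetime, interval: timedelta) -> bool:
    """A job is due if it never ran or a full interval has elapsed."""
    return last_run is None or now - last_run >= interval


if __name__ == "__main__":
    print(interval_from_env("120000"))
```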

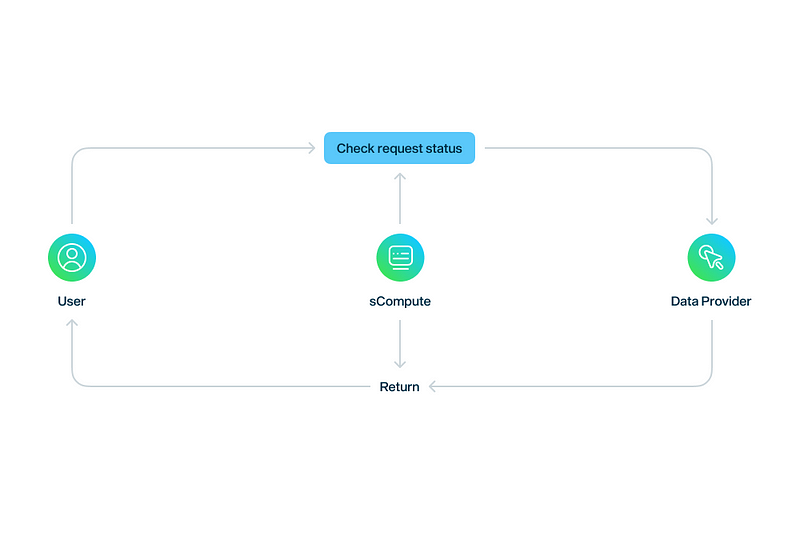

The status updater job is yet another configurable scheduler. It finds all unfinished jobs in the job history, checks their final status in Redshift, and updates each job’s status according to the Redshift response.

The repeat pattern of this job is set in the .env file through the variable “STATUS_UPDATER_JOB_CRON”. The value must be a cron expression. For example, “10 * * * * *” means that this job is executed automatically at the 10th second of each minute.
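The example expression has six fields, which implies a seconds field in front of the usual five (second, minute, hour, day of month, month, day of week). The sketch below shows how such an expression selects moments in time. It is a deliberately reduced matcher that supports only `*` and plain integers, not a replacement for the cron library the DPP actually uses, and the function name is hypothetical.

```python
from datetime import datetime


def matches(expression: str, moment: datetime) -> bool:
    """Check a six-field cron expression (seconds first) against a moment.

    Only '*' and plain integers are supported; ranges, lists, and steps
    are outside the scope of this sketch.
    """
    fields = expression.split()
    if len(fields) != 6:
        raise ValueError("expected six fields: sec min hour dom month dow")
    values = [
        moment.second,
        moment.minute,
        moment.hour,
        moment.day,
        moment.month,
        (moment.weekday() + 1) % 7,  # cron counts Sunday as 0
    ]
    return all(f == "*" or int(f) == v for f, v in zip(fields, values))


if __name__ == "__main__":
    print(matches("10 * * * * *", datetime(2023, 1, 1, 12, 30, 10)))
```

With “10 * * * * *”, only the seconds field is constrained, so the expression matches once per minute, at second 10.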

- Data Provider SDK

This module helps the Data Product Provider connect to the data, filter it, check the status of queries, and export and save the result to the specific path. The Data Provider SDK can connect to several types of database, for example MongoDB, PostgreSQL, Redshift, and MySQL. The DPP sends the request’s filtering criteria and the SDK translates them into an executable script based on the type of database. Currently, the database type is Redshift.

- Fountain Module

This is an off-chain module that listens to the purchase contract events and stores the purchase data. The Data Product Provider reads the fountain data frequently and, once informed of a purchase, prepares the request data in AWS.

- AWS Accounting and Request verification Service

A module that creates the token used to authenticate requests in the DPP Backend. It also validates the requests and creates the AWS credentials for the users.

- Price Calculator

Once the DPP Backend has calculated the size of the data, and if the user wants to purchase it, the next step is for this module to calculate the price from that size.

- DPP Client SDK

This SDK is responsible for translating the user data request into a database SQL script (currently an AWS Redshift SQL script) and, in turn, asking the data warehouse to execute the script, generate the final data as a file, and save the data file (currently in AWS S3).

Data Flow

The user can log in to the sCompute portal and create their own data request, which is sent to the sCompute backend server.

The sCompute backend signs the data with its key and sends the data to the DPP backend server. The DPP backend validates the data and saves it to the database. Based on the type of request, it can create a one-time or a repeatable job.

The next step in the DPP’s data creation process is a new SDK that sends the data request to the DPP backend without the sCompute portal. With this SDK, each project can create and send its own data separately.

After saving the request in the DPP database and creating a suitable job for it, one job executor creates the final select SQL and executes this SQL script on the AWS Redshift server, saving the result in a file at the specific S3 path.

Save Request Flow

Purchase Flow

Check Request

Query Builder

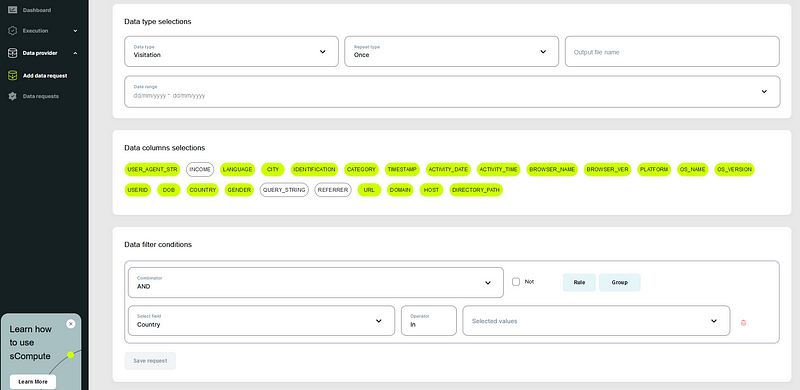

The Query Builder component helps the user to filter the data in order to send the data request. The query builder component has two responsibilities:

- Creating the request via UI

Users can filter the data with this component. At the end, a single JSON object containing all of the user’s conditions is created. This JSON is sent to the DPP database when the request is saved.

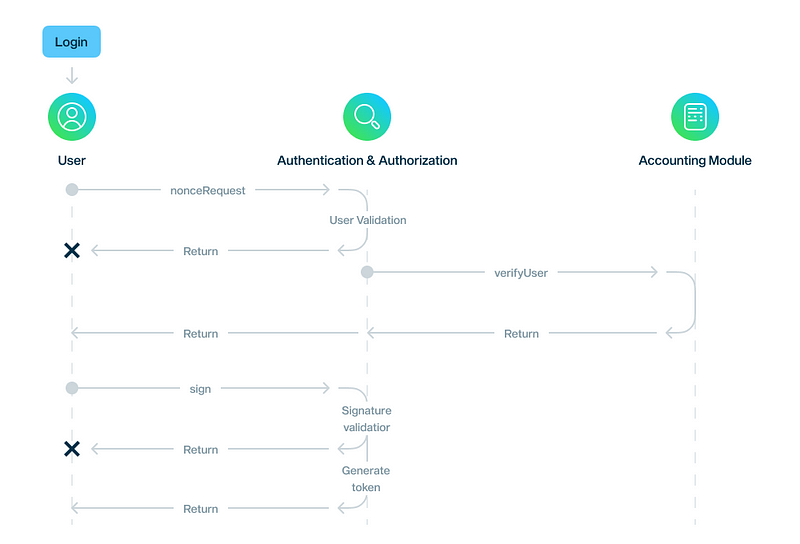

- Security Login flow

In this flow, a user is verified and authorised based on their wallet address and through using the related private key. At the end of this flow, a secure token containing their identity information is generated and used for session management of requests.

Any valid client Ethereum wallet can be used for authentication, which means that even an unregistered client wallet address can be used for authentication and interaction. However, the signature generation mentioned in the login flow is done for each interaction.
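The article does not describe the token format, so the following is only a sketch of one common approach: an HMAC-signed token that carries the wallet address and an expiry time. It assumes the wallet signature has already been verified elsewhere; that step needs an Ethereum signing library and is left out. The function names, the token layout, and the server-side secret are all hypothetical.

```python
import base64
import hashlib
import hmac
import json
import time
from typing import Optional


def issue_token(
    wallet_address: str, secret: bytes, ttl_seconds: int, now: Optional[float] = None
) -> str:
    """Create a signed token holding the wallet address and an expiry time."""
    issued_at = int(time.time() if now is None else now)
    claims = {"wallet": wallet_address.lower(), "exp": issued_at + ttl_seconds}
    payload = json.dumps(claims, sort_keys=True).encode()
    signature = hmac.new(secret, payload, hashlib.sha256).digest()
    return (
        base64.urlsafe_b64encode(payload).decode()
        + "."
        + base64.urlsafe_b64encode(signature).decode()
    )


def verify_token(token: str, secret: bytes, now: Optional[float] = None) -> Optional[str]:
    """Return the wallet address for a valid, unexpired token, else None."""
    try:
        payload_part, signature_part = token.split(".")
        payload = base64.urlsafe_b64decode(payload_part)
        signature = base64.urlsafe_b64decode(signature_part)
    except ValueError:  # wrong number of parts or invalid base64
        return None
    expected = hmac.new(secret, payload, hashlib.sha256).digest()
    if not hmac.compare_digest(signature, expected):
        return None
    claims = json.loads(payload)
    current = time.time() if now is None else now
    if claims["exp"] <= current:
        return None
    return claims["wallet"]
```

The signature is compared with `hmac.compare_digest` so that the check runs in constant time, and the claims are parsed only after the signature has been confirmed.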

About Swash

Know your worth and earn for being you, online.

Swash is an online earning portal where you can earn up to $200 per month for being active and completing tasks online. Whether you’re surfing the web, seeing ads, or sharing opinions, you deserve to be thanked for your efforts.

New ways to amp up your earnings are added to Swash every month!

Alongside the Swash earning dashboard, Swash consists of a wide network of interlinking collaborators including:

- brands that publish ads with Swash Ads

- businesses that buy and analyse data with sIntelligence

- data scientists who build models with sCompute

- developers who innovate on the Swash stack

- charities who receive your donations with Data for Good

Swash has completed a Data Protection Impact Assessment with the Information Commissioner’s Office in the UK and is an accredited member of the Data & Marketing Association.

Nothing herein should be viewed as legal, tax or financial advice and you are strongly advised to obtain independent legal, tax or financial advice prior to buying, holding or using digital assets, or using any financial or other services and products. There are significant risks associated with digital assets and dealing in such instruments can incur substantial risks, including the risk of financial loss. Digital assets are by their nature highly volatile and you should be aware that the risk of loss in trading, contributing, or holding digital assets can be substantial.

Reminder: Be aware of phishing sites and always make sure you are visiting the official https://swashapp.io website. Swash will never ask you for confidential information such as passwords, private keys, seed phrases, or secret codes. You should store this information privately and securely and report any suspicious activity.